‘Problematic quantification’ is not an expression I can claim to have heard in the past, and it’s certainly not one I’ve ever used myself.

However, at a recent meeting of the Parliamentary Advisory Council for Transport Safety (PACTS), the expression came up in a discussion about the review of the legal framework for automated vehicles being conducted by the Law Commission of England and Wales and the Scottish Law Commission. The consultation is open until 8th February. If you want to know more, details can be found on the Law Commission website.

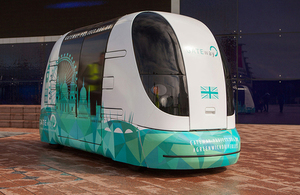

Automated ‘driver assistance features’ currently on the market operate at what is known as ‘Level 2’ on the automated vehicle tech matrix. In layman’s terms, that means drivers using them retain all their existing legal obligations. The tech includes such features as parking assist and lane departure warning systems.

But the conversation at PACTS went far beyond Level 2. It envisaged ‘Level 4’ vehicles, those capable of ‘driving themselves’ for part of the journey and then handing over to a human driver. The handover might be planned – or unplanned after the vehicle has come to a safe stop.

This consultation poses numerous fascinating and sometimes very challenging issues. I think most people would agree that all automated vehicles that drive themselves should have a ‘user-in-charge’ who is able to operate the vehicle’s controls, unless the vehicle is specifically authorised to operate safely without one, and that the user-in-charge must be qualified and fit to drive.

It is suggested that a ‘user-in-charge’ would not necessarily be a driver while the automated system is engaged – and would not be liable for any criminal offences arising from the driving task. But should it be a criminal offence for a user-in-charge who is subjectively aware of a risk of serious injury, to fail to take reasonable steps to avert that risk?

The conversation also took in the question of automated vehicles and speeding. We know that the police currently have discretion to enforce speed limits. Guidelines indicate that fixed penalty notices are only appropriate when the speed exceeds the limit by at least 10% plus 2 miles per hour.
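To put numbers on that guideline, here is a rough sketch of the arithmetic in Python. The function names are my own invention for illustration, and the calculation simply assumes the rule means "limit plus 10% of the limit plus 2 mph"; it is not taken from any official tool or from the consultation itself.

```python
from fractions import Fraction


def fixed_penalty_threshold(limit_mph):
    """Speed (mph) at which the guideline quoted above would start to apply.

    Assumption: threshold = limit + 10% of limit + 2 mph.
    Fractions are used so that 10% of 30 is exactly 3, not 3.0000000000000004.
    """
    return Fraction(limit_mph) * Fraction(11, 10) + 2


def meets_guideline(speed_mph, limit_mph):
    """True if the speed is at or above the guideline threshold."""
    return Fraction(speed_mph) >= fixed_penalty_threshold(limit_mph)


if __name__ == "__main__":
    # Print the threshold for some common speed limits.
    for limit in (20, 30, 40, 50, 60, 70):
        print(f"{limit} mph limit: threshold {float(fixed_penalty_threshold(limit))} mph")
```

On that reading, the threshold in a 30 mph zone works out at 35 mph, and at 79 mph on a 70 mph road.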

The question then arises: should these tolerances also apply to automated vehicles? To maintain traffic flow? To prevent overly sharp braking when reaching a lower speed limit? Or where it might be in the interests of safety (e.g. overtaking a vehicle quickly to avoid a collision)? Do we want to programme vehicles to stick exactly to the speed limit or should we build in some tolerances?

Then there’s driving on the footpath. Under the current law it is an offence for a vehicle to drive on a pavement. Section 34(4) of the Road Traffic Act 1988 recognises an exception for “saving life or extinguishing fire or meeting any other like emergency”. However, a similar offence under section 72 of the Highway Act 1835 does not include any such exceptions.

Should an automated vehicle be programmed to mount the pavement in different circumstances, for example to avoid collisions, to allow emergency vehicles to pass, to enable traffic flow, or in any other circumstances?

Apparently, one of the most difficult challenges faced by automated vehicles is how to cope with groups of pedestrians blocking their path. The concern is that if automated vehicles always stop, and pedestrians know that they will always stop, pedestrians may take advantage of this and simply walk into the road. It raises the question as to what happens when they do that in front of a non-automated vehicle, of course!

Would it ever be acceptable for a highly automated vehicle to be programmed to ‘edge’ through pedestrians, if it meant that a pedestrian who does not move faces some small chance of being injured? If so, what could be done to ensure that edging only occurs in appropriate circumstances?

In other words, would it be okay to programme autonomous vehicles to be able to run a few people down in order to make progress through a crowd of people, perhaps at a sporting event… and, if yes, how many?

Difficulty in defining a pre-arranged number of casualties is clearly a problematic quantification. In effect, it is too much of a problem to overcome, and that sort of situation would require control of the automated vehicle to be passed to a ‘user-in-charge’. However, that might not necessarily be a person in the vehicle: the designated ‘user-in-charge’ could operate the vehicle remotely.

It’s food for thought!
